TransUnion LLC, one of the three major credit reporting companies in the United States, has branches on every continent except Antarctica. Reportedly, the personal information and credit histories of some 200 million consumers in the U.S. alone are stored on its servers; I have not been able to find verifiable information regarding consumers located outside the U.S.

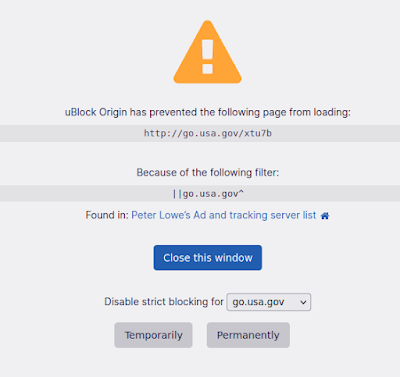

Some of you may remember that TransUnion recently suffered a data breach (I will be using the GDPR definition of a personal data breach from Article 4(12): "a breach of security leading to the accidental or unlawful destruction, loss, alteration, unauthorised disclosure of, or access to, personal data transmitted, stored or otherwise processed").

"How recently?" comes the voice from the back of the room. "Which one are you talking about?"

Good question; it is hard to keep track of them. Let's go over a few of them and later see what we can learn from them.

The Events

- In 2005, a long time ago (in dog years), TransUnion lost a laptop containing personal data of more than 3,600 US consumers. The Chicago-based company offered up to one year of free credit reports to the affected customers. At the time (one must remember these were pre-GDPR/CCPA/BIPA times), some of the main questions raised were:

- Were credentials to access the TransUnion databases and other systems also exposed?

- TransUnion chose to report the data breach. At the time there was no real requirement to do so: California Senate Bill 1386 of 2002, one of the first security breach notification laws, specified criteria that companies should use to determine whether they were required to report an incident. If they answered "yes" to every single one of the following questions, they had to report the breach:

- Does their data include "personal information" as defined by the statute?

- Does that "personal information" relate to a California resident?

- Was the "personal information" unencrypted?

- Was there a "breach of the security" of the data as defined by the statute?

- Was the "personal information" acquired, or is it reasonably believed to have been acquired, by an unauthorized person?
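The test above is a plain conjunction: a single "no" means no notification duty under this reading. Here is a minimal Python sketch of that logic, assuming it can be reduced to five yes/no answers; the `Incident` class and its field names are hypothetical labels of my own, not terms from the statute.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    # Hypothetical fields, one per SB 1386 question listed above.
    involves_personal_information: bool
    relates_to_california_resident: bool
    data_unencrypted: bool
    security_breached: bool
    acquired_by_unauthorized_person: bool  # or reasonably believed to have been


def must_notify(incident: Incident) -> bool:
    """Return True only if every one of the five questions is answered 'yes'."""
    return all((
        incident.involves_personal_information,
        incident.relates_to_california_resident,
        incident.data_unencrypted,
        incident.security_breached,
        incident.acquired_by_unauthorized_person,
    ))


# Example: a lost laptop whose data was encrypted fails the "unencrypted" question.
encrypted_laptop = Incident(True, True, False, True, True)
print(must_notify(encrypted_laptop))  # False
```

Note how the encryption question acts as a safe harbor: under this simplified reading, encrypting the data alone is enough to make notification unnecessary.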

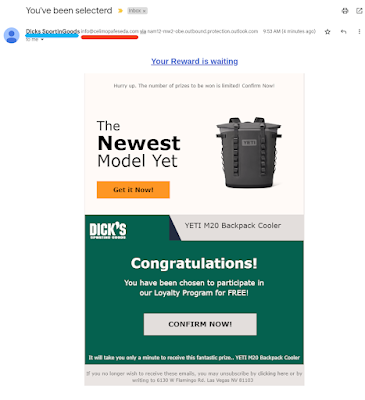

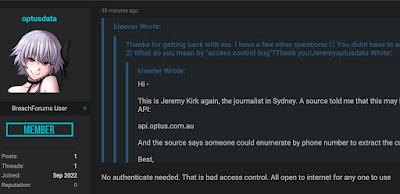

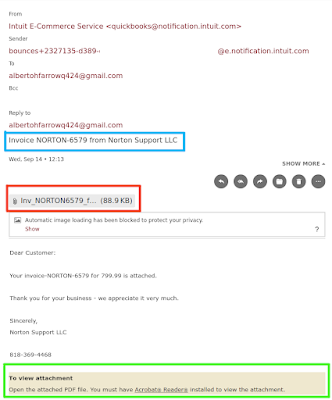

So TransUnion is very popular this month, this time due to a larger issue than possibly being used to send phishing emails.

- During the summer of 2019, the personal data of some 37,000 Canadians held on TransUnion servers were compromised. Note that Canada's Digital Privacy Act, which amended PIPEDA and introduced mandatory breach notification requirements, had become law four years earlier. GDPR and CCPA had also already become law.

- On March 12, 2022, ITWeb broke the story of a data breach, which led TransUnion to admit that attackers had indeed stolen 28 million credit records. At first it was believed that more than 3 million South Africans, as well as businesses such as Mazda, Westbank, and Gumtree, were affected. The Brazilian group claiming responsibility, "N4ughtysecTU," stated that it gained access through a poorly secured TransUnion SFTP server (its password was "Password"). TransUnion later stated that more than 5 million consumers were actually affected and once again offered a period of free credit reports to those affected.

- On November 7, 2022, TransUnion reported to the Massachusetts Attorney General a data breach that could involve 200 million files profiling nearly every credit-active consumer in the United States. On the same day, TransUnion also sent data breach notification letters to all individuals whose information it believes was compromised. As this story is still developing, the true impact is not yet known.

OK, I will stop here. If TransUnion has had another data breach between November 7, 2022 and the publication of this article, it should not affect the point I am making.

The Outcomes

According to GDPR Recital 85 (see also Recital 75), the adverse effects a personal data breach can have on an individual include loss of control over their personal data, limitation of their rights, discrimination, identity theft or fraud, financial loss, unauthorised reversal of pseudonymisation, damage to reputation, and loss of confidentiality of personal data protected by professional secrecy. So, if TransUnion were a European company, or if people living in the European Economic Area (EEA) were affected by this personal data breach, then as the data controller it would have to notify the competent Supervisory Authority within 72 hours of becoming aware of the breach (Art. 33), unless the breach is unlikely to result in a risk to the rights and freedoms of data subjects. In this case, they had better be reporting. The next step would be to inform everyone who was possibly affected about what happened, what the likely consequences are for them, and what TransUnion is doing about it (Art. 34). Of course, those affected should be expected to file complaints with their local Supervisory Authorities (Art. 77).
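GDPR Article 33(1) asks the controller to notify "without undue delay and, where feasible, not later than 72 hours after having become aware of" the breach; a later notification must come with reasons for the delay. The arithmetic is simple, but the clock starts at awareness, not at the breach itself. A minimal Python sketch, assuming timezone-aware timestamps; `notification_deadline` and `is_late` are hypothetical helper names, and the dates in the example are made up.

```python
from datetime import datetime, timedelta, timezone

# Art. 33(1): "where feasible, not later than 72 hours after having become aware"
NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(became_aware_at: datetime) -> datetime:
    """Latest time to notify the supervisory authority without giving reasons for delay."""
    if became_aware_at.tzinfo is None:
        # Naive datetimes are ambiguous across time zones, so reject them.
        raise ValueError("became_aware_at must be timezone-aware")
    return became_aware_at + NOTIFICATION_WINDOW


def is_late(became_aware_at: datetime, notified_at: datetime) -> bool:
    """True if the notification was sent after the 72-hour window closed."""
    return notified_at > notification_deadline(became_aware_at)


# Hypothetical example: the controller becomes aware on Monday at 09:00 UTC.
aware = datetime(2023, 1, 2, 9, 0, tzinfo=timezone.utc)
print(notification_deadline(aware).isoformat())  # 2023-01-05T09:00:00+00:00
```

Weekends and holidays do not pause the 72-hour window, which is why the sketch simply adds 72 hours rather than counting business days.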

In the United States, things are a bit different. The U.S. Supreme Court's 2021 decision in TransUnion LLC v. Ramirez held that only plaintiffs who can show concrete harm have standing to seek damages against private defendants in federal court. How will victims of a personal data breach prove that their personal information was stolen and disclosed through the negligence of the company holding it, in violation of American consumer protection and privacy laws such as the California Consumer Privacy Act (CCPA) and the Illinois Biometric Information Privacy Act (BIPA), and that they suffered concrete harm as a result? Compare that with the already mentioned GDPR Article 77 and Recital 141, which require only that the data subject consider that his or her rights have been infringed, or that the "supervisory authority does not act on a complaint, partially or wholly rejects or dismisses a complaint or does not act where such action is necessary to protect the rights of the data subject."

That said, this may change. With the US government and the European Union currently working together to establish a new EU-US data flow deal (Privacy Shield 2?), one must wonder how they will reconcile this Supreme Court decision with GDPR. Which one will take precedence?

Fun Facts

- I started this article by mentioning the phishing campaign TransUnion was possibly being used to launch. What if that is related to this data breach? I mean, if your attack has succeeded and you are already in the final (Actions on Objectives) stage of the cyber kill chain, taking your time to hoover up the victim's data, why not see what else you can do while you are there to pass the time?

- In addition to its main line of business, TransUnion also offers services to help companies "protect and restore consumer confidence" after a data breach (they do not list an office address there). In fact, it bills itself as the "One-Stop-Shop Incident Response Solution."

- I made those round images representing the three regulations mentioned here because I did not have an interesting image for this article. They turned out nice, so expect me to make more and use them in future posts. You have been warned!