Full disclosure: I put Optus in the title because those LinkedIn articles advocate the need for clickbait to attract viewers. Problem is, I am actually going to talk about this company. But this article is really about code development gone bad; Optus just happens to be the perfect example of, in the words of Jeremy Clarkson, what could possibly go wrong.

We are Agile!

In earlier, simpler times, the recommended software development lifecycle model (SDLC for you acronym addicts) was the Waterfall Model. There are many places which describe it better than I ever could, so suffice it to say that it is linear: it starts with the idea, then goes to the design, and then through a few steps including coding and testing until the product is deployed and goes into maintenance mode. In other words, you start with an idea and end up with a product.

Making the code secure, or implementing (Buzzword time!) privacy by design, was fairly easy if the security and privacy team was involved from the get-go, as that was just another well-defined step.

But what if the product needs to be changed? As in, not just a patch but a feature request, or something that requires a new library or a user interface redesign. You need to go back to the start.

You can say it is a bit rigid, and many people would agree with you. The next step was modifying the model so you could hop back a step or two, and that started to get messy. The bottom line is that it does not take changes well. In many fields that is completely fine. However, for code which is always changing and is put into production as soon as the changes are done, like a website, it can slow down the delivery of a working product. In some industries, whoever puts it out first, even if it is not perfect, wins. So, we need something better.

We evolved into the Agile model, which, as a friend taught me, is also called the "Never Finished Model." What the joke implies is that this model is designed to handle changes quickly and deliver a working product even if it is not perfect. The reason is that you can improve on it later, once you have some feedback from customers.

The following picture shows a typical Continuous Integration/Continuous Deployment (CI/CD) pipeline, which is a hallmark of using the Agile model in code development. How do we account for security and privacy here? DevSecOps places security controls in the CI/CD process of DevOps. Note the two red boxes: they are the points where we add security testing to the cycle, one for Static Application Security Testing (SAST) and the other for Dynamic Application Security Testing (DAST). The red arrows indicate that any funny business they find is then sent to something that logs and reports it by creating tickets, sending emails, or something else. This is, of course, ideally supposed to be done in conjunction with training developers in secure coding, (Buzzword Alert!) privacy by design, and whatnot.
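To make the red arrows a bit more concrete, here is a minimal Python sketch of what a gate sitting after the SAST/DAST steps might do: turn every finding into a ticket-like record and fail the build when something at or above a chosen severity shows up. The `Finding` structure, the severity names, and the `gate` function are all hypothetical; real scanners have their own report formats and real trackers their own APIs.

```python
from dataclasses import dataclass

# Hypothetical severity scale; real scanners define their own.
SEVERITY_ORDER = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    tool: str       # e.g. "sast" or "dast"
    rule: str       # short identifier of what was flagged
    severity: str   # one of the SEVERITY_ORDER keys
    location: str   # file:line or URL where it was found


def to_ticket(finding: Finding) -> dict:
    """Turn a finding into a ticket-like record for a tracker or an email."""
    return {
        "title": f"[{finding.tool.upper()}] {finding.rule}",
        "priority": finding.severity,
        "body": f"Found at {finding.location}",
    }


def gate(findings: list[Finding], fail_at: str = "high") -> tuple[bool, list[dict]]:
    """Return (build_passes, tickets).

    Every finding becomes a ticket; the build fails only if at least one
    finding is at or above the `fail_at` severity.
    """
    threshold = SEVERITY_ORDER[fail_at]
    tickets = [to_ticket(f) for f in findings]
    passes = all(SEVERITY_ORDER[f.severity] < threshold for f in findings)
    return passes, tickets


if __name__ == "__main__":
    # Made-up findings, for illustration only.
    report = [
        Finding("sast", "plaintext-http-url", "high", "client.py:12"),
        Finding("dast", "missing-auth-on-endpoint", "critical", "/customers"),
        Finding("sast", "verbose-logging", "low", "util.py:40"),
    ]
    ok, tickets = gate(report)
    print("build passes:", ok)
    for t in tickets:
        print(t["priority"], "-", t["title"])
```

The `fail_at` threshold is the tuning knob: set it too low and developers drown in blocked builds, set it too high and the gate is decoration.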

In reality, some companies/developers who should know better decide that this slows them down and cramps their style. In other words, they need to be putting new code out with new features, and privacy and security are not features but

Enter the Optus

Singtel Optus Pty Limited, a.k.a. Optus, is the second largest wireless carrier in Australia. In the last week of September 2022, Optus reported that on 22 September 2022 it was the victim of a very sophisticated cyberattack by members of a criminal or state-sponsored organization. This attack resulted in a major personal data breach, where the names, dates of birth, phone numbers, email addresses, street addresses, driver's licences, and passport numbers of both current and former customers were leaked. Optus chief executive Kelly Bayer Rosmarin said that they "are not aware of customers having suffered any harm."

Insert here the videos of a guy in a hoodie in a dark room and computer screens showing random Linux output.

What does this very sophisticated cyberattack have to do with coding?

Glad you asked.

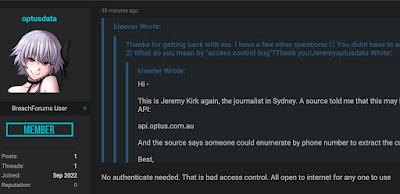

You see, later on it was found that Optus had an unauthenticated API, http://api.www.optus.com.au, that released all of the personal data it stored, not only of current but also of previous customers (there is the case of someone who has not been an Optus customer for the last 14 years and not only received an email from them about the breach but also started to be flooded with spam). We are talking about data from 10 million people. Unencrypted.

Optus detected the event when the attacker started hitting the API hard.

So, the questions are:

- Why did it have an exposed API without some kind of authentication? Perhaps that was originally done to make testing the API more convenient for developers. I myself have seen that in the wild. When developers/DevOps from the environment in question were asked to at least limit access to a network only reachable from behind their firewall, they shrugged it off, saying the VPN (which is not a solution but sure is an improvement) was too cumbersome to use from their personal laptops.

- Why was the connection to said exposed API unencrypted? Do you remember when we said that DevSecOps places security controls in the CI/CD process? That probably would have caught it: the SAST would have noticed the unencrypted connections in the code; the ones I have used before would bark at unencrypted traffic (and hardcoded passwords, which was not the case here since no passwords were used). In the real world that does not happen as much as people believe. In fact, it is all too common to hear that DevSecOps slows down DevOps' work.

- Why was the personal data stored unencrypted? Once again, convenience. Maybe encryption was recommended and then turned down because developers argued it would slow the response time of the system. Once again, SAST would have caught that.
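To give a feel for the kind of "barking" mentioned in the questions above, here is a toy sketch in Python of a static check that flags plaintext `http://` URLs and hardcoded passwords in source text. It is nothing like a real SAST tool, which parses the code and follows data flows instead of grepping lines; the rule names, the patterns, and the `scan` function are made up for illustration.

```python
import re

# Hypothetical rules: a quoted plaintext http:// URL, and a password
# assigned to a string literal. Real tools ship far richer rule sets.
RULES = {
    "plaintext-http-url": re.compile(r"""["']http://[^"'\s]+["']"""),
    "hardcoded-password": re.compile(r"""(?i)\bpassword\s*=\s*["'][^"']+["']"""),
}


def scan(source: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every rule match, in line order."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name, pattern in RULES.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


if __name__ == "__main__":
    # Made-up snippet; the .invalid host name is a placeholder.
    sample = (
        'BASE_URL = "http://api.example.invalid/customers"\n'
        'SAFE_URL = "https://api.example.invalid/customers"\n'
        'password = "hunter2"\n'
    )
    for lineno, rule in scan(sample):
        print(f"line {lineno}: {rule}")
```

Even a check this crude, run on every commit, would complain about a client talking to an `http://` endpoint long before the code reached production.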

Clearly there were poor security practices at play here. Perhaps DevOps security and privacy training never happened, or SAST/DAST was never implemented in the SDLC chain. Usually that happens because they are considered cost centers that, as mentioned earlier, slow down progress. Remember that automated security testing creates tickets which developers have to deal with in addition to the tickets they already have on their plates.

Post Mortem

Don't be that guy!

- Privacy by design would not have allowed this kind of code to even make it into the repo.

- Encrypt the traffic to the API, period. Ideally that should be done at the API level. I know some people will put an Nginx proxy in front of the unencrypted API (using Kubernetes or Docker), and I cringe at that: it is an improvement over the Optus setup, but not by much.

- Encrypt your data at rest. Yes, that is especially important for personal data, but it is a good habit regardless.

- All connections to an API should be authenticated by default. If you have a query, say list status, that you want to make available to unauthenticated users, spend some serious time thinking about the consequences.

- Ensure your CI/CD process has proper security controls. If DevOps is being swamped with the tickets generated by these controls, this may mean they need more security and privacy training, or the controls need better tuning, or the external code/libraries you rely on are not as well written as they should be. That is how BadUSB and many of the IoT issues came into being.
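As an illustration of "authenticated by default," here is a minimal Python sketch of a request dispatcher where every route requires a valid token unless someone explicitly marks it public. Everything in it (the `route` decorator, `dispatch`, the paths, the demo token) is hypothetical and only meant to show the shape of the idea; a real service would lean on its framework's authentication layer and proper token verification.

```python
import hmac

# Demo-only token table; a real service would verify signed tokens or sessions
# and would never keep credentials in source code.
VALID_TOKENS = {"demo-token-for-alice": "alice"}

ROUTES = {}  # path -> (handler, is_public)


def route(path, public=False):
    """Register a handler. Handlers require authentication unless public=True."""
    def register(handler):
        ROUTES[path] = (handler, public)
        return handler
    return register


def authenticate(token):
    """Return the user name for a valid token, else None."""
    if token is None:
        return None
    for known, user in VALID_TOKENS.items():
        # Constant-time comparison avoids leaking how much of the token matched.
        if hmac.compare_digest(known.encode(), token.encode()):
            return user
    return None


def dispatch(path, token=None):
    """Return (status_code, body) for a request."""
    if path not in ROUTES:
        return 404, "not found"
    handler, public = ROUTES[path]
    user = authenticate(token)
    if not public and user is None:
        return 401, "authentication required"
    return 200, handler(user)


@route("/status", public=True)  # a deliberate, reviewed exception
def status(user):
    return "ok"


@route("/customers/me")  # protected by default: nobody had to remember to lock it
def my_record(user):
    return f"record for {user}"


if __name__ == "__main__":
    print(dispatch("/status"))
    print(dispatch("/customers/me"))
    print(dispatch("/customers/me", "demo-token-for-alice"))
```

The point of the design is that forgetting something fails closed: a developer who adds a new route and forgets about security gets a 401, not a data breach. Opening a route up takes an explicit `public=True` that shows up in code review.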